Hi, I need to load the contents of a .txt file into arrays ("vectors"). The fields are already separated, but I can't figure out how to load them into memory as arrays I can search. At the moment I work directly on the file, but because it is large, the search times are long. With VB6 on a PC you don't notice, but with Android unfortunately you ...


Android Question load data into memory

- Thread starter lelelor


Thanks, here is the link.

It'd be simpler if you could change the sharing to "anybody with link can access"; that saves having to request and grant access via email. ✌


Downloaded OK.

I placed the link again.

If you're only reading the data, I still like regex as a backup plan: load the entire file into a single string, and then you can search it, e.g.:

- find products with codes starting with 990122
- find products with descriptions containing "MUSIC" (case-sensitive)
- find products with no price

with the bonus that matching product records come back already split into fields (i.e. code, description and price).
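A minimal sketch of that regex approach in B4X, assuming each record is one line of the form code,description,price (for example 990122001,MUSIC BOX,12.50) and that the file was added to the project as products.txt. The file name, the sample line and the Sub names FindRecords and RegexExamples are hypothetical. Each of the three capture groups returns one field, so every match comes back already split.

```b4x
' Hypothetical helper: returns every line that matches Pattern as a String array
' (code, description, price). Assumes comma-separated lines with exactly 3 fields.
Sub FindRecords(AllText As String, Pattern As String) As List
    Dim results As List
    results.Initialize
    ' MULTILINE makes ^ and $ match at the start and end of each line
    Dim m As Matcher = Regex.Matcher2(Pattern, Regex.MULTILINE, AllText)
    Do While m.Find
        ' groups 1..3 hold code, description and price
        results.Add(Array As String(m.Group(1), m.Group(2), m.Group(3)))
    Loop
    Return results
End Sub

Sub RegexExamples
    ' Load the entire file into a single string (file name is an assumption)
    Dim AllText As String = File.ReadString(File.DirAssets, "products.txt")
    ' Codes starting with 990122
    Dim byCode As List = FindRecords(AllText, "^(990122[^,\r\n]*),([^,\r\n]*),([^,\r\n]*)$")
    ' Descriptions containing "MUSIC" (case-sensitive)
    Dim byText As List = FindRecords(AllText, "^([^,\r\n]*),([^,\r\n]*MUSIC[^,\r\n]*),([^,\r\n]*)$")
    ' Records whose price field is empty
    Dim noPrice As List = FindRecords(AllText, "^([^,\r\n]*),([^,\r\n]*),()$")
    Log(byCode.Size & TAB & byText.Size & TAB & noPrice.Size)
End Sub
```

B4X string literals do not process backslash escapes, so \r and \n reach the regex engine unchanged and are read there as carriage return and line feed. For a case-insensitive description search, pass Bit.Or(Regex.MULTILINE, Regex.CASE_INSENSITIVE) as the options instead.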


At the risk of flogging a dead horse (Italian: pestare l'acqua nel mortaio), I thought I would test the limits of Maps. For those who are still interested, here are my results.

B4X:

```b4x
Sub testMaps
    Dim theLines As List
    ' Dim rp As RuntimePermissions
    ' Dim theDir As String = rp.GetSafeDirDefaultExternal
    ' theLines = File.ReadList(theDir, "myFile.txt")
    ' (ReadList returns whole lines; each line would still need to be split
    '  into a String array, e.g. with Regex.Split(",", line), before the loop below)

    'The following 4 lines create 160001 test records.
    theLines.Initialize
    For i = 0 To 160000
        theLines.Add(Array As String(1000000000 + i, "abcdefg" & i, i Mod 20))
    Next

    'At first you might think that Maps would not be able to handle this, but that isn't true; here all values are indexed in one Map
    Dim marker As Long = DateTime.Now
    Dim indices As Map
    indices.Initialize
    For i = 0 To theLines.Size - 1
        Dim fields() As String = theLines.Get(i)
        For j = 0 To 2
            If indices.ContainsKey(fields(j)) Then
                Dim indexSet As List = indices.Get(fields(j))
                indexSet.Add(i)
            Else
                Dim newIndexSet As List 'it is very important to create a new list for each unique entry in the Map
                newIndexSet.Initialize
                newIndexSet.Add(i)
                indices.Put(fields(j), newIndexSet)
            End If
        Next
    Next
    Log((DateTime.Now - marker)) 'it took about 800 milliseconds to index all values in the file

    'on my tablet, it takes less than a millisecond to find the one occurrence of the search string
    marker = DateTime.Now
    Dim searchResults As List = indices.Get("abcdefg" & "12345")
    Log((DateTime.Now - marker) & TAB & searchResults.Get(0))

    'it took 5 milliseconds to find all 8000 occurrences of a given price "17" (the complete list of indices is one entry)
    marker = DateTime.Now
    searchResults = indices.Get("17")
    Log(searchResults.Size)
    For Each index As Int In searchResults
        Dim aRecord() As String = theLines.Get(index)
        'Log(aRecord(0) & TAB & aRecord(1) & TAB & aRecord(2)) 'it takes about 500 milliseconds to log them all
    Next
    Log((DateTime.Now - marker))

    'Note: there is a memory limit, of course. On my device with 1.3 GB available, up to 600000 records is OK.
    'For many real-life datasets, this is a simple and very fast solution.
End Sub
```


So, we're dealing with several issues here (including a language barrier), but that's OK.

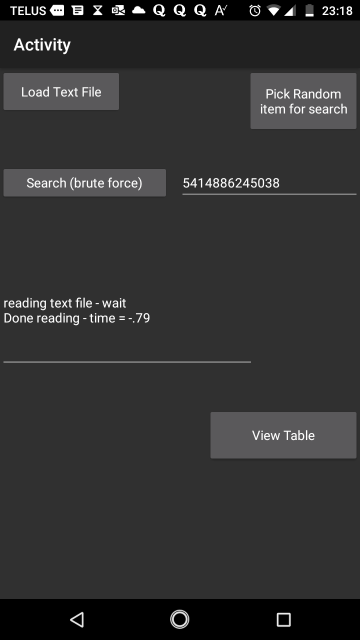


So, with the 10,000-row example, we found that plain brute force works: just read the file and loop over it. It works rather well, and it needs no "special" tricks or even special knowledge.

We loaded the text file with some VERY straightforward code, and to find a match we used this equally straightforward code. It looks like what we would write on an old PC, or even on an old Amstrad computer running BASIC:

B4X:

```b4x
' OneRow: a record variable declared elsewhere (its type has a PNum field)
Dim bolFound As Boolean = False
Dim intFoundPosition As Int = 0
For i = 0 To MyList.Size - 1
    OneRow = MyList.Get(i)
    If OneRow.PNum = txtSearch.Text Then
        ' got one - stop the loop
        intFoundPosition = i
        bolFound = True
        Exit
    End If
Next
If bolFound Then
    EditText1.Text = "Found"
End If
```

So no fancy tricks: the above code is VERY similar to VB on the desktop.
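The search loop relies on MyList holding one record per row, each with a PNum field, but the loading code itself is not shown. A minimal sketch of how such a list could be built, assuming the file is comma-separated with product number, description and price in that order. The type name ProductRow, its Description and Price fields, and the Sub name LoadRows are hypothetical; the Type line belongs in your existing Process_Globals, and the code needs a reference to the StringUtils library.

```b4x
Sub Process_Globals
    ' Hypothetical record type for one line of the file
    Type ProductRow (PNum As String, Description As String, Price As String)
End Sub

' Builds a List of ProductRow records from a comma-separated file
Sub LoadRows(Dir As String, FileName As String) As List
    Dim su As StringUtils
    Dim rows As List
    rows.Initialize
    ' LoadCSV returns a List with one String array per line
    Dim raw As List = su.LoadCSV(Dir, FileName, ",")
    For Each fields() As String In raw
        ' skip malformed lines instead of crashing on fields(2)
        If fields.Length < 3 Then Continue
        Dim r As ProductRow
        r.Initialize
        r.PNum = fields(0)
        r.Description = fields(1)
        r.Price = fields(2)
        rows.Add(r)
    Next
    Return rows
End Sub
```

With this in place, MyList = LoadRows(File.DirAssets, "analperk1.txt") fills the list, and OneRow would be declared as Dim OneRow As ProductRow.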

But, as we found out, the 10,000-row solution was great, but not for, say, 150k rows!

So this problem is really no different from any other set of choices we make in a day. For example, we need food, but we can't comfortably eat all the food we need for a day in one sitting, so we use two or more sittings!

And walking to the store? A few blocks? Sure, we can just walk!

But what if the store is 25 km away? Well, now we drive or use transit!

So: how far, how big, how much? We go through this process constantly every day, and it is also a process we go through when working with software!

OK, so let's nail down this issue. We need these answers:

- What is the row count of the final text file: how many rows?
- How often do you need to update this list (once a day, once a week, once a month)?
- How much do you expect the row count to grow over time?

Bonus questions:

- Is your goal to search by any of the 3 columns, or in general just one?
- Could you, in the future, want to add MORE information about a given product, for example additional pricing?

Bonus bonus questions:

Do those numbers represent a bar code? Phones nowadays make quite decent bar code scanners, all without ANY new hardware!!!

A few years ago, camera-based scanners (and their software) were REALLY poor; now they work well!

Now, next up: your text file.

REALLY, you can probably attach that file to this thread with no problem. But PLEASE zip it first!!!

I mean, even when you send a document to someone, you zip it up, since it then becomes 5 or 8 times smaller!

And if you bring me a plate of food for lunch, you put a cover on it, right?

Again, this is just basic courtesy: not a huge deal, but it all helps.

Edit: it looks like the G-Drive link works. My apologies about the zip-file advice above; I'm just really trying to save time here. Not the end of the world, but zipping files is good advice, even if I was not 100% nice about it!

So, try zipping up the text file; I bet it is now quite small, perhaps even within the size limits for posting here.

So, as asked above: how often do you need to update this list with a new copy? That information is also important.

Next up, I will look at the full text file, and run and cook up something that works.

But you have to make the effort to zip that text file first.

I don't care if we find some Dropbox with huge capacity; I simply don't care!

I simply want the effort made to provide that file as a zip file; that's how things work on the internet.

Also, before I look at the file, the above questions have to be nailed down.

If the file is still too large to attach here after zipping, then you can email the zip file to me; I WILL take a look at it.

My email you can use is [email protected]

Just remove the PleaseNoSpam from the above, and you have my email address!

But you have to answer the above questions first!

Each of the above questions is a fork in the road. Without answers to them, I don't know which road to take, and I'd be wasting my time and yours! There is little use in driving down 4 different roads when some directions can put me on the correct road!! (Notice again how this process is about life, not really software!!!)

However, so far I am nearly 100% convinced we are going to use a database, and thus on application startup there will be ZERO loading time for the data!

And since I am suggesting a database as the solution, that is WHY I am asking how often this text file data changes, or how often you need an updated (fresh) copy of that text file.

The more often that file has to be reloaded (a fresh copy), the more effort a database solution requires, because I would use desktop tools to create that database rather than create it on Android.
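To make the database option more concrete, a minimal sketch of the lookup side, assuming a SQLite file products.db built on the desktop with a table products(code, description, price) and an index on code (for example CREATE INDEX idx_code ON products(code)), then added to the project's files. The file, table, column and Sub names are hypothetical, and the code needs the SQL library. Files in File.DirAssets are read-only, so the database is copied out once; shipping a fresh copy means replacing that copied file, which is the update cost mentioned above.

```b4x
Sub Process_Globals
    Private sql1 As SQL ' requires the SQL library
End Sub

' Copies the prebuilt database out of the read-only assets once, then opens it
Sub OpenDatabase
    If File.Exists(File.DirInternal, "products.db") = False Then
        File.Copy(File.DirAssets, "products.db", File.DirInternal, "products.db")
    End If
    sql1.Initialize(File.DirInternal, "products.db", False)
End Sub

' Indexed lookup by product code; returns "" when nothing matches
Sub FindByCode(Code As String) As String
    Dim rs As ResultSet = sql1.ExecQuery2("SELECT description, price FROM products WHERE code = ?", Array As String(Code))
    Dim result As String = ""
    If rs.NextRow Then
        result = rs.GetString("description") & TAB & rs.GetString("price")
    End If
    rs.Close
    Return result
End Sub
```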

Regards,

Albert D. Kallal

Edmonton, Alberta Canada


Quote: "For those who are still interested, here are my results."

Lol, at least you recognize that our interest in data structures is bordering on unhealthy.

Quote: "On my tablet, it takes less than a millisecond to find the one occurrence of the search string."

Wouldn't surprise me if it was less than a microsecond, assuming a decent hash function.

Quote: "It took 5 milliseconds to find all 8000 occurrences of a given price '17' (the complete list of indices is one entry)."

This seems rather long, given that it should just be a single hash lookup returning a pointer to the preconstructed list of indices. Perhaps it is making a copy of the list.


@emexes says:


"Lol, at least you recognize that our interest in data structures is bordering on unhealthy."

Indeed. I hope the original poster is still with us.

I think the "long" time (less than the blink of an eye) to get the results is because I put all values in one Map (160001 x 2 + 20 = 320022 hash entries). Separate Maps for each field would be better.
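A minimal sketch of that idea: one Map per field, each built the same way as the single Map in testMaps. It assumes Records is a List of String arrays (code, description, price), like theLines in the test; the Sub names BuildFieldIndex and IndexExample are hypothetical. Apart from keeping each Map smaller, separate Maps also stop a value in one field (say a description of "17") from being mixed in with the same value in another field.

```b4x
' Builds value -> List of row numbers for a single column (FieldIndex 0, 1 or 2)
Sub BuildFieldIndex(Records As List, FieldIndex As Int) As Map
    Dim fieldMap As Map
    fieldMap.Initialize
    For i = 0 To Records.Size - 1
        Dim fields() As String = Records.Get(i)
        Dim key As String = fields(FieldIndex)
        If fieldMap.ContainsKey(key) = False Then
            ' a new List for every unique value, as in testMaps
            Dim rowNumbers As List
            rowNumbers.Initialize
            fieldMap.Put(key, rowNumbers)
        End If
        Dim existing As List = fieldMap.Get(key)
        existing.Add(i)
    Next
    Return fieldMap
End Sub

Sub IndexExample(Records As List)
    Dim byCode As Map = BuildFieldIndex(Records, 0)
    Dim byPrice As Map = BuildFieldIndex(Records, 2)
    If byPrice.ContainsKey("17") Then
        Dim rowsWithPrice17 As List = byPrice.Get("17")
        Log(rowsWithPrice17.Size)
    End If
    Log(byCode.Size)
End Sub
```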


OK, first: I'm going to post a solution, a NICE one. The poster will LOVE this.

Next up? Boy, it's nice to have some egg on our faces.

Man, I was rummaging around in my closet for a computing science book! (Yes, true!! I did not find that book.)

Nearly bringing in NASA and the Mars lander team, and suggesting highly complex solutions?

Yup, guilty!!!

So, here we are running around in white lab coats? Yup.

Suggesting a database system? Yup, guilty!

You know what we REALLY should do?

Let's go back 27 posts!!!! And do 100% what you asked!!!

Let's read a text file into an array, or say a list?

OK, no problem!!!

This works REALLY well!!!!

Now, I am making "some" fun of myself above, but it's actually fair!!!

One really big advantage here: that table is 147,000 rows, but it is VERY narrow!

Most CSV files, say from Excel, will have 20 or 30 columns. You have 3 columns!!! (3!!!!)

So that is 10x LESS data than a typical table of 30 columns.

Now, to be fair, my napkin estimates for time some posts back were spot on, but I am seeing even better times.

I am now running these in release mode.

So, we now have this:

And that is 149,000 rows, the posted file. Well, gee, it loads in less than one second!!!

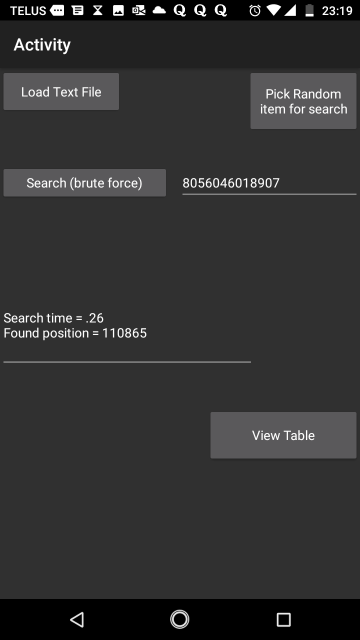

How about a raw, row-by-row loop search on 149,000 rows? I have a "random" button above; it picks a row between 0 and 149,000. So whack it a few times until we get a test for over 100k rows. This looks good: a raw loop search past row 110,000:

Well, Bob's your uncle!!! With a raw loop in "basic" code, we find position 110865 in 0.26 seconds!!!! (About a quarter of a second.)

So this means we don't need NASA, we don't need my computing science book, and we do not need a database either!!!

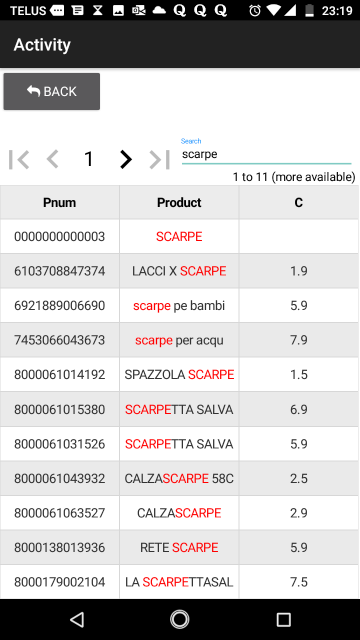

Also note the View Table button above: I dragged and dropped in a B4XTable.

That is taking 7 seconds to load, so I'll consider lazy loading it. But hit View Table, and you get the table screen.

And note the search bar!!!! I did NOT write ONE line of code to make that whole screen work!

The "red" text highlights whatever you type into the search box!!!

(It's called B4XTable, if you're wondering; it is a built-in feature.)
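For anyone curious how little code such a table needs, a minimal sketch, assuming a B4XTable added in the Designer as B4XTable1 (hypothetical name), the XUI Views library referenced, and a file in which every line has exactly three fields (code, description, price). The column titles and the Sub name FillTable are assumptions, not taken from the attached project. SetData is a resumable sub, so it is called with Wait For rather than as a plain method.

```b4x
Sub FillTable
    Dim su As StringUtils
    ' One String array per line; every line must have one value per column
    Dim data As List = su.LoadCSV(File.DirAssets, "analperk1.txt", ",")
    B4XTable1.AddColumn("Code", B4XTable1.COLUMN_TYPE_TEXT)
    B4XTable1.AddColumn("Description", B4XTable1.COLUMN_TYPE_TEXT)
    B4XTable1.AddColumn("Price", B4XTable1.COLUMN_TYPE_TEXT)
    ' SetData returns a ResumableSub; wait for it to finish
    Wait For (B4XTable1.SetData(data)) Complete (Unused As Boolean)
    Log("Rows loaded: " & data.Size)
End Sub
```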

So, in summary:

B4A just rocks; it pretty much blew both my shirt and pants off!!!

No NASA, no computing science books, no binary chop search.

No database either!!!

In a brilliant bout of beautiful, tasty egg on my face?

I read a text file into a list (that's much like an array).

Now, wait, wait, wait for it!!!

The poster, 27 posts back: can we read a text file into an array or some such?

Why can't I just read a text file?

The answer? Yes, you can, and it's not at all a bad idea either!!!

Anyone have a Rube Goldberg comic handy?

Hmm, what should we do? Call NASA? Dig out computing science books?

Perhaps we get the Mars Rover software team involved?

No, what we need to do is read the text file!!!

Now, to be fair, credit goes to B4A. There was too much overthinking, too much NASA and computing science being applied here.

Just read the text file into the list. Now you can search it with the first screen, but the B4XTable? Well, now you can page to the next page, type in a search (it's a partial match), and it works, and works amazingly well!!!
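If you also want a partial match on the first screen (without B4XTable), the raw loop extends naturally. A minimal sketch, assuming the rows were loaded with StringUtils.LoadCSV, i.e. a List of String arrays; SearchPartial and MaxResults are hypothetical names. It lower-cases both sides, so the match is case-insensitive, and it stops after MaxResults hits to keep the result list small.

```b4x
' Returns up to MaxResults rows where any field contains Term (case-insensitive)
Sub SearchPartial(Rows As List, Term As String, MaxResults As Int) As List
    Dim matches As List
    matches.Initialize
    Dim needle As String = Term.ToLowerCase
    For Each fields() As String In Rows
        For Each field As String In fields
            If field.ToLowerCase.Contains(needle) Then
                matches.Add(fields)
                Exit ' this row matched; no need to check its other fields
            End If
        Next
        If matches.Size >= MaxResults Then Exit
    Next
    Return matches
End Sub
```

For example, SearchPartial(MyList, "music", 100) returns at most 100 rows with "music" anywhere in any field.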

The whole app, including the zipped file, is attached. Do remember that you want to run it in release mode: you'll find that loading the whole file and searching the whole list both take well under 1 second!!!

One little note: you have to include your text file; I did not include it in the posted sample. Also, your file had a funny character in it or who knows what (the file was not being seen correctly by B4A). So copy your txt file; I have named the copy "analperk1.txt" (all lower case).

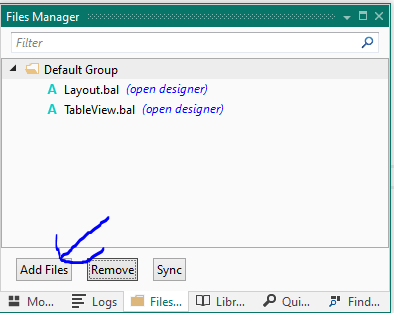

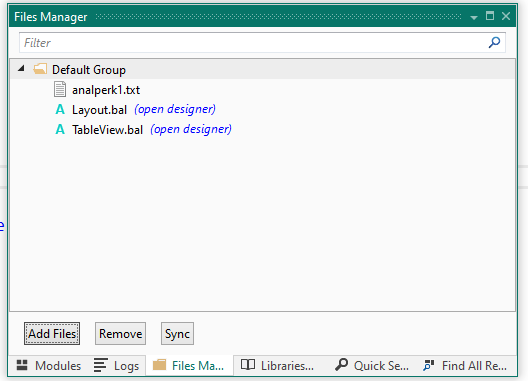

So, after you unpack the sample, you have to add the analperk1.txt file to the B4A project. From the menu, go to Windows -> Files Manager.

Then simply choose Add Files:

Browse to the newly named copy of your text file; we assume it is now named analperk1.txt.

After adding it, you see this:

After that, you should be good to go with the sample project.

In summary:

B4A is showing more performance than I gave it credit for.

Loading/reading 150k rows: not out of the question.

Searching that list row by row with a raw loop? Not a problem!

And better yet, tossing the whole lot over to B4XTable?

You get a search system and a way to view and browse the data.

As noted, there is a significant delay in opening that large table, but we can work on that problem.

Summary #2:

This was a lot of fun, and I learned some new things. I learned that while your table was large, it was "narrow", and that was a HUGE help in working out this problem.


Using a built-in "reading" library was nice: it was fast and easy to do.


For reading the file, I used this code:

B4X:

```b4x
Dim su As StringUtils ' requires the StringUtils library
ProgressBar1.Visible = True
EditText1.Text = "running"
Sleep(0) ' let the UI redraw
MyList.Initialize
Dim t1 As Long = DateTime.Now
EditText1.Text = "reading text file - wait" & CRLF
Sleep(0)
' LoadCSV returns a List with one String array per line
MyList = su.LoadCSV(File.DirAssets, "analperk1.txt", ",")
' elapsed seconds = (now - start) / 1000
EditText1.Text = EditText1.Text & "Done reading - time = " & NumberFormat((DateTime.Now - t1) / 1000, 0, 2) & CRLF
ProgressBar1.Visible = False
```

And while arrays() are possible, a List is easier to use and more dynamic. We don't have ReDim for arrays like we do in VB, but with lists I never miss that feature. I suggest you adopt a List in place of an array: lists are easy to use, similar to an array, but better in most cases.
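To illustrate the List-versus-array point: a List grows as items are added, so nothing like ReDim is needed, and if some API does want a fixed-size array, the List can be copied into one at the end. A minimal sketch; ListToArray, ListExample and the sample codes are hypothetical.

```b4x
' Copies a List of strings into a new fixed-size String array
Sub ListToArray(Items As List) As String()
    Dim result(Items.Size) As String
    For i = 0 To Items.Size - 1
        result(i) = Items.Get(i)
    Next
    Return result
End Sub

Sub ListExample
    Dim codes As List
    codes.Initialize
    codes.Add("990122001") ' the List grows as needed
    codes.Add("990122002")
    Dim codeArray() As String = ListToArray(codes)
    Log(codeArray.Length) ' logs 2
End Sub
```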

I don't know how much main memory your phone has. Mine has 2 GB. I think a phone with 1 GB of main memory would work OK.

And my phone has an older A53 CPU, so it is somewhat lower end. If your phone has better specs than that, you'll see even better performance.

So try the sample. Just remember to re-add the text file under the new name given above.

Regards,

Albert D. Kallal

Edmonton, Alberta Canada


Quote: "In summary: B4A is showing more performance than I give it credit for."

Lol

Quote: "Summary #2: This was a lot of fun, and I learned some new things."

Difficult to get much simpler than SerialBuffer = SerialBuffer & s. I used to worry about efficiency, but when I actually tested the speed of Java string operations, I was surprised: they are amazingly fast. Erel was correct, as always (well, mostly).
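If you want to check this on your own device, a minimal micro-benchmark sketch comparing plain concatenation with StringBuilder; CompareConcat and the 10-character test string are hypothetical, and timings will vary with the device, release versus debug mode, and string length. Strings are immutable, so each & builds a new string that copies the old contents, meaning the cost of concatenation grows with the buffer length; for a short serial buffer that is rarely noticeable.

```b4x
' Hypothetical micro-benchmark: appends a short string Count times both ways
Sub CompareConcat(Count As Int)
    Dim s As String = "0123456789"

    Dim t0 As Long = DateTime.Now
    Dim buffer As String = ""
    For i = 1 To Count
        buffer = buffer & s ' creates a new string each time
    Next
    Log("concatenation: " & (DateTime.Now - t0) & " ms, length " & buffer.Length)

    t0 = DateTime.Now
    Dim sb As StringBuilder
    sb.Initialize
    For i = 1 To Count
        sb.Append(s) ' appends into a growable internal buffer
    Next
    Dim result As String = sb.ToString
    Log("StringBuilder: " & (DateTime.Now - t0) & " ms, length " & result.Length)
End Sub
```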
