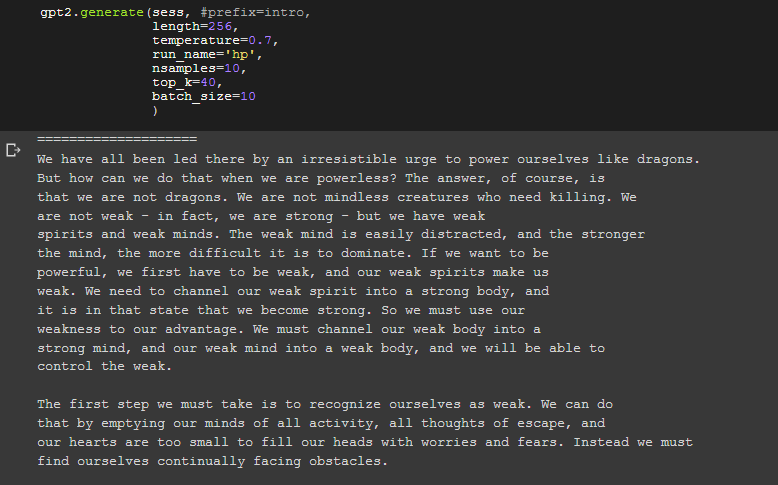

So I've been fine-tuning a GPT-2 (345M) model on the Harry Potter books.

It generated the text in the image above.

Conclusion: The deeper the network, the deeper the bullsh*t!