TensorFlowLite - an experimental machine/deep learning wrapper for B4A

New version: 0.20 (29/08/2018):

I have updated the library and the sample-models because the first version was based on older code.

I have also attached the updated Java sources.

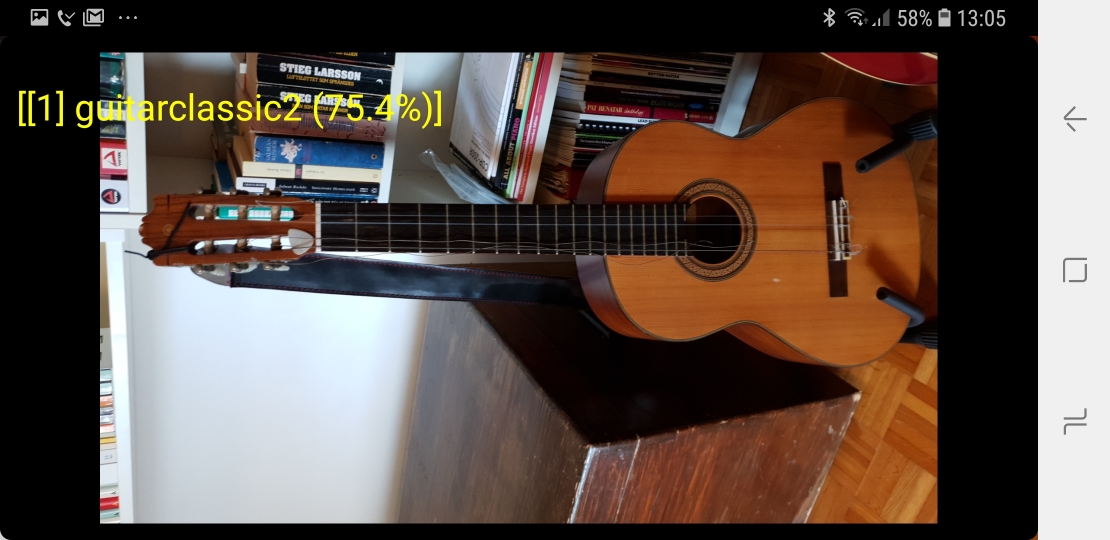

I also created my own model to use with the wrapper which works really well. I have put some links to some good resources/tutorials. See the Spoiler for some screenshots using my guitar-model.

If you used the first version, please update the demo and the B4A-libs.

After a recent gallstone operation, I am now at home for a week or so before it's time to go back to work. So I am using this "free time" to do some fun and interesting stuff with B4A.

I started playing around with TensorFlowLite for Android/B4A and came up with this experimental wrapper based on various examples found on the internet.

First some background (from the TensorFlow website):

What is TensorFlow?

TensorFlow™ is an open source software library for high performance numerical computation. Its flexible architecture allows easy deployment of computation across a variety of platforms (CPUs, GPUs, TPUs), and from desktops to clusters of servers to mobile and edge devices. Originally developed by researchers and engineers from the Google Brain team within Google’s AI organization, it comes with strong support for machine learning and deep learning and the flexible numerical computation core is used across many other scientific domains.

What is TensorFlowLite?

TensorFlow was designed to be a good deep learning solution for mobile platforms. As such, TensorFlowLite provides better performance and a small binary size on mobile platforms as well as the ability to leverage hardware acceleration if available on their platforms. In addition, it has many fewer dependencies so it can be built and hosted on simpler, more constrained device scenarios. TensorFlowLite also allows targeting accelerators through the Neural Networks API.

PS: There is also another implementation for mobile, namely TensorFlow Mobile, which currently has more functionality than TensorFlowLite. As far as I understand, it will eventually be replaced by TensorFlowLite, which has a smaller binary size, fewer dependencies, and better performance.

You can read more here:

https://www.tensorflow.org/

The use cases are numerous. This wrapper (and the demo-app) lets you take a picture which TensorFlowLite will then analyze to try to figure out what object it shows. More than one object can be suggested, so the suggestions are sorted by confidence score. This works because TensorFlowLite analyzes the image against a predefined model (a sort of classifier, graph) which has been trained to recognize certain objects. In this demo, I am using a very generic sample-model created by Google which recognizes various objects (see the list attached).
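To illustrate the sorting by confidence score, here is a minimal Java sketch. It is not the wrapper's actual code: the class names `Recognition` and `TopResults`, the `select` method and the threshold parameter are all made up for this example. It only shows the general idea of turning raw model scores into a short, ranked list of suggestions.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical result type: a label plus the model's confidence for it. */
final class Recognition {
    final String label;
    final float confidence;

    Recognition(String label, float confidence) {
        this.label = label;
        this.confidence = confidence;
    }
}

/** Hypothetical helper, not part of the wrapper. */
final class TopResults {
    /** Returns up to maxResults entries with confidence >= threshold, highest first. */
    static List<Recognition> select(String[] labels, float[] scores,
                                    int maxResults, float threshold) {
        List<Recognition> candidates = new ArrayList<>();
        for (int i = 0; i < scores.length && i < labels.length; i++) {
            if (scores[i] >= threshold) {
                candidates.add(new Recognition(labels[i], scores[i]));
            }
        }
        // Sort descending so the most likely object comes first.
        candidates.sort(
            Comparator.comparingDouble((Recognition r) -> r.confidence).reversed());
        int count = Math.min(maxResults, candidates.size());
        return new ArrayList<>(candidates.subList(0, count));
    }
}
```

With labels `guitar, banjo, violin, cello` and scores `0.10, 0.70, 0.05, 0.15`, calling `TopResults.select(labels, scores, 3, 0.1f)` would drop `violin` (below the threshold) and return `banjo`, `cello`, `guitar` in that order.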

More importantly, you can create and train your own models, for example to recognize animals, cars etc., which gives far better accuracy for those objects. I have also created my own model which recognizes my guitars. I set up what was needed on my Mac and created a model in about 30 minutes. You can find instructions on the TensorFlow website and of course on YouTube. I also recommend the following two Codelabs (tutorials) by Google: tensorflow-for-poets and tensorflow-for-poets-2-tflite-android.

How to use the wrapper in your app?

1) The official TensorFlowLite library by Google is under continuous development, and future versions may not work with my current implementation. Therefore, for this wrapper you will need to download the following version (1.10) from here:

https://tinyurl.com/y9jlc59w

and copy it to your additional library folder.

2) In this wrapper, I am also using the Guava IO Library which you can download from here:

https://github.com/google/guava/releases/download/v26.0/guava-26.0-android.jar

Then copy it to your additional library folder.

3) Download and extract the attached TensorFlowLite library wrapper and its XML-file and copy them to your additional library folder.

4) Finally, you will of course need a model (classifier). In this case, for the demo-app, you need to download the file "assets.zip" from here:

https://www.dropbox.com/s/keuwqr8fys1lx8m/assets.zip?dl=0

and extract its content and copy the files to your app's assets-folder. The easiest way to do this is just to add the files using B4A's Files Manager. The demo-app is attached as well. It is basically Erel's Camera2 sample-app which I have stripped down to its bare minimum adding the TensorFlowLite functionality.

5) Look at the code in the demo-app and you can see how quick and easy it is to implement this wrapper in your own apps.

The wrapper exposes only two methods, namely:

-Initialize

which initializes TensorFlowLite. It reads the model file (tflite) and the label file from the assets folder. The default input size is 224.

-recognizeImage

which asks the TensorFlowLite classifier to recognize a bitmap/image and return the possible results.
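For anyone wondering what the input size is for: image classifiers of this kind expect a fixed-size square image (224 x 224 by default here), usually packed into a buffer of numbers. The Java sketch below shows one common way of doing that for a float model. It is only an illustration and not taken from the wrapper's sources: the class `InputPreprocessor`, the method `toFloatBuffer` and the normalization constants (127.5) are assumptions, and a quantized model would need bytes instead of floats.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical helper, not part of the wrapper. */
final class InputPreprocessor {
    static final int INPUT_SIZE = 224;      // default input size mentioned above
    static final float IMAGE_MEAN = 127.5f; // assumed normalization constants
    static final float IMAGE_STD = 127.5f;

    /**
     * Packs inputSize * inputSize ARGB pixels into a float32 RGB buffer,
     * scaling each channel from 0..255 to -1..1. Alpha is ignored.
     */
    static ByteBuffer toFloatBuffer(int[] argbPixels, int inputSize) {
        if (argbPixels.length != inputSize * inputSize) {
            throw new IllegalArgumentException("pixel count does not match input size");
        }
        // 4 bytes per float, 3 channels per pixel.
        ByteBuffer buffer = ByteBuffer.allocateDirect(4 * inputSize * inputSize * 3);
        buffer.order(ByteOrder.nativeOrder());
        for (int pixel : argbPixels) {
            buffer.putFloat((((pixel >> 16) & 0xFF) - IMAGE_MEAN) / IMAGE_STD); // red
            buffer.putFloat((((pixel >> 8) & 0xFF) - IMAGE_MEAN) / IMAGE_STD);  // green
            buffer.putFloat(((pixel & 0xFF) - IMAGE_MEAN) / IMAGE_STD);         // blue
        }
        buffer.rewind();
        return buffer;
    }
}
```

On Android, the pixel array would typically come from a bitmap that has first been scaled to `INPUT_SIZE` x `INPUT_SIZE`.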

Note: the minSdkVersion for the demo-app is 21, probably because Camera2 requires this. If you don't use Camera2 in your app, then you can probably use a much lower minSdkVersion. It should work with at least minSdkVersion 15 but I read somewhere it might even work with minSdkVersion 4(!) although I haven't tried. You will need to experiment.

Note2: I have added the Java-sources too if someone would like to add/change functionality or maybe keep the wrapper updated and in line with newer future releases of TensorFlowLite.

Note3: Combined with @JordiCP's excellent wrapper of OpenCV, I think you have a good base to come up with some really nice stuff (although in this case the TensorFlowLite wrapper might need to be customized for your needs).

Ideas for improvements:

-Implement real-time object detection/recognition using the video-camera.

-Use cropping to let TensorFlowLite better analyze an image with multiple objects. Simultaneous recognition of multiple objects is not supported in my wrapper.
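As a rough idea of how the cropping suggestion could start, the Java sketch below splits an image into a grid of rectangles. Each rectangle could then be cropped from the picture and classified on its own. This is not implemented in the wrapper; the class `CropGrid` and its `tiles` method are hypothetical, and a real solution would probably also want overlapping or differently sized crops.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper, not part of the wrapper. */
final class CropGrid {
    /**
     * Splits an image into rows x cols tiles.
     * Each entry is {left, top, width, height} in pixels, listed row by row.
     */
    static List<int[]> tiles(int imageWidth, int imageHeight, int rows, int cols) {
        if (rows <= 0 || cols <= 0) {
            throw new IllegalArgumentException("rows and cols must be positive");
        }
        List<int[]> result = new ArrayList<>();
        for (int r = 0; r < rows; r++) {
            // Integer division on the edges makes the tiles cover the
            // whole image even when the size is not evenly divisible.
            int top = r * imageHeight / rows;
            int bottom = (r + 1) * imageHeight / rows;
            for (int c = 0; c < cols; c++) {
                int left = c * imageWidth / cols;
                int right = (c + 1) * imageWidth / cols;
                result.add(new int[] {left, top, right - left, bottom - top});
            }
        }
        return result;
    }
}
```

For a 640 x 480 picture, `CropGrid.tiles(640, 480, 2, 2)` gives four 320 x 240 tiles, starting at the top-left corner.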

Please remember that creating, maintaining and supporting libraries takes time. Please consider a donation if you use my free libraries, as this will surely help keep me motivated. Thank you!

Enjoy!

