Hi all, I wanted to get everyone's suggestions or ideas about what the more efficient way of retrieving all the data from an ExpressSQL server that is running on an old PC, This PC unfortunately can not be connected to the network as it is not supported by our I.T. department.

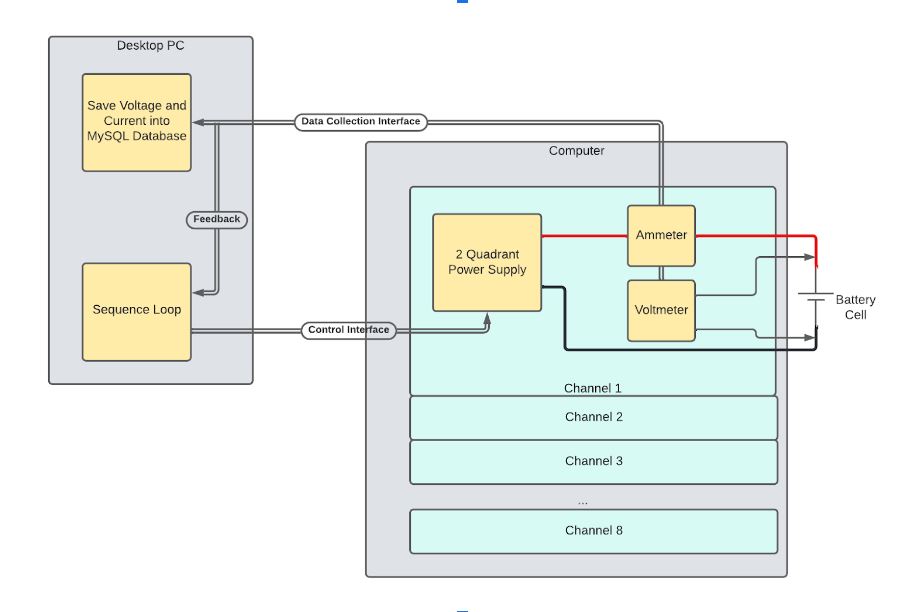

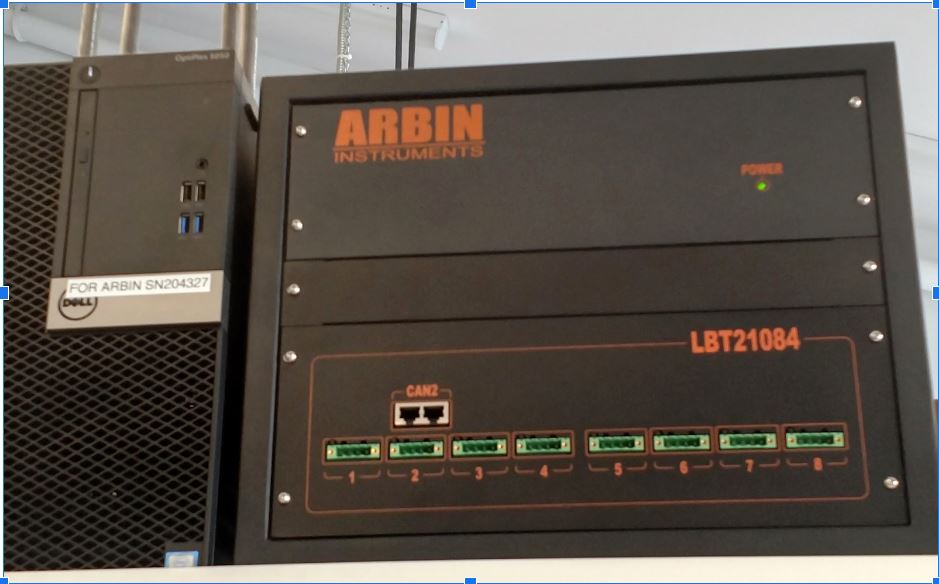

This PC is connected to an Arbin Battery Test System which runs constantly 24/7 collecting information on different types of batteries. At the moment the only way we have of retrieving the data collected is by Connecting an Ethernet cable directly from a laptop to the P.C. and using the Remote Desktop Client, then we run a macro inside an Excel workbook provided by the manufacturer of the Arbin system, which does different queries on the Master Database to retrieve the specific data pertaining to a specific test running.

The requirement now is the following:

I have created a B4J Server which is running on the old PC running windows 7. This server opens a connection to the local ExpressSQL server running on the PC.

I have also created another B4J app which runs on my Dell Laptop, this app sends HTTP requests to the B4J server, The server processes these requests and sends queries to the ExpressSQL server to retrieve the data from the Master Database. The queries consist of selecting certain data from specific tables and sending the results back to the B4J app running on my laptop.

The problem I am facing now is that some tables contain up to 7 Gigs of data if not more, so what I am doing on the B4J server side is that I am writing each row of the results to a csv file which gets saved to a shared folder, but as you all can imagine querying that much data results in a out of memory exception, not to mention that it can take a very long time to process said query. The PC running Windows 7 only has 8G of RAM.

The out of memory exceptions happen while I try to iterate through the Results, one thing I have thought about is iterate through chunks of the results, for example iterate through the first 10k rows, write those rows to the csv file, then iterate through the next 10k rows etc.

The idea is that once all the results from any query have been saved to the csv file, the B4J app running on my Dell laptop will read those files, upload those files to a storage bucket, then insert the data from those files to bigquery tables under the same names.

I hope this makes sense, what I am hoping here, is to know any suggestions or any other ideas about how else can this be accomplished, I have been working on this project on and off, but would love to hear other people's approach or how you guys would tackle this issue. The main purpose for this is basically to have the B4J app running on my laptop run at least once or twice a day and update on the cloud so that other engineers can access this data without having to manually go into the Arbin's PC.

Thanks in advance everyone, can't wait to hear other people's opinions on this.

Walter

This PC is connected to an Arbin Battery Test System which runs constantly 24/7 collecting information on different types of batteries. At the moment the only way we have of retrieving the data collected is by Connecting an Ethernet cable directly from a laptop to the P.C. and using the Remote Desktop Client, then we run a macro inside an Excel workbook provided by the manufacturer of the Arbin system, which does different queries on the Master Database to retrieve the specific data pertaining to a specific test running.

The requirement now is the following:

- Retrieve the data stored in the Main Database which runs in an old PC running windows 7 that can not be connected to the network.

- Upload the Data to bigquery tables.

I have created a B4J Server which is running on the old PC running windows 7. This server opens a connection to the local ExpressSQL server running on the PC.

I have also created another B4J app which runs on my Dell Laptop, this app sends HTTP requests to the B4J server, The server processes these requests and sends queries to the ExpressSQL server to retrieve the data from the Master Database. The queries consist of selecting certain data from specific tables and sending the results back to the B4J app running on my laptop.

The problem I am facing now is that some tables contain up to 7 Gigs of data if not more, so what I am doing on the B4J server side is that I am writing each row of the results to a csv file which gets saved to a shared folder, but as you all can imagine querying that much data results in a out of memory exception, not to mention that it can take a very long time to process said query. The PC running Windows 7 only has 8G of RAM.

The out of memory exceptions happen while I try to iterate through the Results, one thing I have thought about is iterate through chunks of the results, for example iterate through the first 10k rows, write those rows to the csv file, then iterate through the next 10k rows etc.

The idea is that once all the results from any query have been saved to the csv file, the B4J app running on my Dell laptop will read those files, upload those files to a storage bucket, then insert the data from those files to bigquery tables under the same names.

I hope this makes sense, what I am hoping here, is to know any suggestions or any other ideas about how else can this be accomplished, I have been working on this project on and off, but would love to hear other people's approach or how you guys would tackle this issue. The main purpose for this is basically to have the B4J app running on my laptop run at least once or twice a day and update on the cloud so that other engineers can access this data without having to manually go into the Arbin's PC.

Thanks in advance everyone, can't wait to hear other people's opinions on this.

Walter

Last edited: